Security Misconfiguration

SMB Signing Not Required – Linux

TCM-KB-INT-002

Last Updated: 6/23/2023

Linux Operating Systems

The remediation steps and configuration changes described in this article primarily affect endpoint systems running the Linux operating system that host SMB shares through Samba.

SMB

SMB refers to Server Message Block.

Server Message Block is a network file-sharing protocol that lets systems access files, printers, and other resources on remote hosts. On Linux endpoints, SMB shares are typically served by Samba, whose SMB file server daemon is smbd.

Contributor

Joe Helle

Chief Hacking Officer

This Knowledge Base Article was submitted by: Joe Helle.



Issue

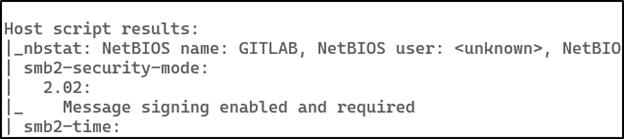

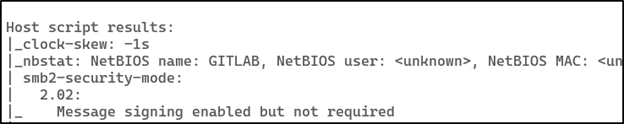

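SMB message signing is not required by the Samba server hosting SMB shares on this Linux endpoint. Because unsigned messages are not rejected, an attacker positioned on the network can tamper with SMB traffic or relay captured authentication to the endpoint (for example, in NTLM relay attacks).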
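To illustrate what signing protects, the following Python sketch models the signing step described in Microsoft's MS-SMB2 specification for the 2.0.2 and 2.1 dialects: the 16-byte Signature field at offset 48 of the 64-byte SMB2 header is zeroed, an HMAC-SHA256 is computed over the whole message with the session's signing key, and the first 16 bytes of the result are written back into that field. This is a simplified model for explanation only; the function names are hypothetical, it does not set the SMB2_FLAGS_SIGNED header flag, and SMB 3.x dialects use AES-based signing with derived keys instead.

```python
import hashlib
import hmac

# SMB2 header layout (MS-SMB2): 64 bytes, with a 16-byte Signature field at offset 48.
SMB2_HEADER_SIZE = 64
SIG_START = 48
SIG_END = SIG_START + 16


def compute_smb2_signature(message: bytes, signing_key: bytes) -> bytes:
    """Compute an SMB 2.0.2/2.1-style signature (first 16 bytes of HMAC-SHA256)."""
    if len(message) < SMB2_HEADER_SIZE:
        raise ValueError("message is shorter than an SMB2 header")
    buf = bytearray(message)
    # The Signature field is zeroed before the hash is computed.
    buf[SIG_START:SIG_END] = bytes(SIG_END - SIG_START)
    digest = hmac.new(signing_key, bytes(buf), hashlib.sha256).digest()
    return digest[: SIG_END - SIG_START]


def sign_smb2_message(message: bytes, signing_key: bytes) -> bytes:
    """Return a copy of the message with its Signature field filled in."""
    buf = bytearray(message)
    buf[SIG_START:SIG_END] = compute_smb2_signature(message, signing_key)
    return bytes(buf)


def verify_smb2_message(message: bytes, signing_key: bytes) -> bool:
    """Recompute the signature and compare it in constant time."""
    expected = compute_smb2_signature(message, signing_key)
    return hmac.compare_digest(expected, message[SIG_START:SIG_END])
```

Any change to the message after signing, or a signature made with a different key, fails verification. Requiring signing means the server insists on this check instead of silently accepting unsigned traffic.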

Recommended Remediation

The following steps are recommended for system and network administrators to secure the environment.

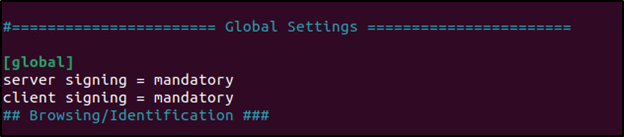

Access the Linux endpoint as the root user or as a user account with sudo privileges. Using a text editor such as Nano or Vim, open the Samba configuration file (typically /etc/samba/smb.conf) and add the following lines under the [global] section header at the top of the file:

server signing = mandatory

client signing = mandatory
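For administrators who script this change across several hosts, the following is a minimal Python sketch, assuming a simple smb.conf made of [section] headers and key = value lines. The function name enforce_signing is hypothetical. It inserts the two settings directly under the first [global] header (creating one if none exists) and drops any older values for those parameters in [global]. Because Samba ignores case and whitespace in parameter names, the sketch normalizes names before comparing them. It does not follow include directives or backslash line continuations.

```python
import re

# Parameters this article asks for, keyed by their normalized name.
REQUIRED = {
    "serversigning": ("server signing", "mandatory"),
    "clientsigning": ("client signing", "mandatory"),
}
SECTION_RE = re.compile(r"^\s*\[([^\]]*)\]")


def normalize(name: str) -> str:
    """smb.conf names ignore case and whitespace, so compare them that way."""
    return "".join(name.split()).lower()


def enforce_signing(conf_text: str) -> str:
    """Return smb.conf text with mandatory signing set under [global]."""
    required_lines = [f"   {name} = {value}" for name, value in REQUIRED.values()]
    out = []
    in_global = False
    added = False
    for line in conf_text.splitlines():
        section = SECTION_RE.match(line)
        if section:
            in_global = normalize(section.group(1)) == "global"
            out.append(line)
            if in_global and not added:
                # Insert the settings directly under the first [global] header.
                out.extend(required_lines)
                added = True
            continue
        stripped = line.strip()
        is_setting = "=" in stripped and not stripped.startswith(("#", ";"))
        if in_global and is_setting and normalize(stripped.split("=", 1)[0]) in REQUIRED:
            continue  # drop any older value for these parameters
        out.append(line)
    if not added:
        # No [global] section exists yet, so create one at the top.
        out = ["[global]", *required_lines, *out]
    return "\n".join(out) + "\n"
```

In practice, one would back up the original smb.conf, write the returned text back to it, and run testparm -s to confirm that Samba can parse the file before restarting the service. Running the function twice produces the same result, so it is safe to reapply.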

Restart the Samba service so that the new settings take effect. Run the following command with sudo or root privileges:

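systemctl restart smbd.service

On Red Hat-based distributions such as RHEL, CentOS, and Fedora, the Samba file server service is named smb.service, so the equivalent command is systemctl restart smb.service.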
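The restart can also be wrapped so that the configuration is validated first. The following Python sketch is hypothetical (restart_samba is not part of Samba); it assumes testparm exits with a non-zero status when it cannot load the configuration, and it uses smbd.service as the default unit name, which should be changed to smb.service on Red Hat-based systems.

```python
import subprocess


def restart_samba(unit: str = "smbd.service", dry_run: bool = False) -> list[list[str]]:
    """Validate smb.conf with testparm, then restart the given Samba unit.

    Returns the commands in execution order; with dry_run=True nothing is run.
    """
    commands = [
        ["testparm", "-s"],  # parse smb.conf without the interactive prompt
        ["systemctl", "restart", unit],
    ]
    if not dry_run:
        for cmd in commands:
            # check=True raises CalledProcessError and stops at the first failure,
            # so the service is not restarted with a configuration testparm rejected.
            subprocess.run(cmd, check=True)
    return commands
```

Running the validation step first avoids restarting smbd into a configuration it cannot parse, which would otherwise take the shares offline.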

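Verify the change by running Nmap's smb2-security-mode script against TCP port 445 on the endpoint, for example nmap -p 445 --script smb2-security-mode <target>, and reviewing the results. After remediation, the output should report "Message signing enabled and required" rather than "Message signing enabled but not required".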
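When checking many endpoints, the Nmap result can be interpreted programmatically. The following Python sketch assumes nmap is installed and on PATH; the function names are hypothetical. It runs the smb2-security-mode script and classifies the "Message signing" line of its output: "enabled and required" means the remediation is in place, while any "not required" wording means the finding is still present.

```python
import subprocess
from typing import Optional


def run_smb2_security_mode(target: str) -> str:
    """Run Nmap's smb2-security-mode script against TCP 445 and return stdout."""
    result = subprocess.run(
        ["nmap", "-p", "445", "--script", "smb2-security-mode", target],
        check=True,
        capture_output=True,
        text=True,
    )
    return result.stdout


def signing_required(nmap_output: str) -> Optional[bool]:
    """Classify smb2-security-mode output.

    True  -> signing is required (remediation applied)
    False -> signing is not required (finding still present)
    None  -> no verdict found (e.g., port closed or the script did not run)
    """
    for line in nmap_output.splitlines():
        if "Message signing" not in line:
            continue
        # Check "not required" first so it is never mistaken for "required".
        if "not required" in line:
            return False
        if "and required" in line:
            return True
    return None
```

For example, signing_required(run_smb2_security_mode("192.0.2.10")) would return True for a remediated host at that (placeholder) address. A None result should be investigated rather than treated as a pass.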
